Deep Science AI joins Defendry to automatically detect crimes on camera

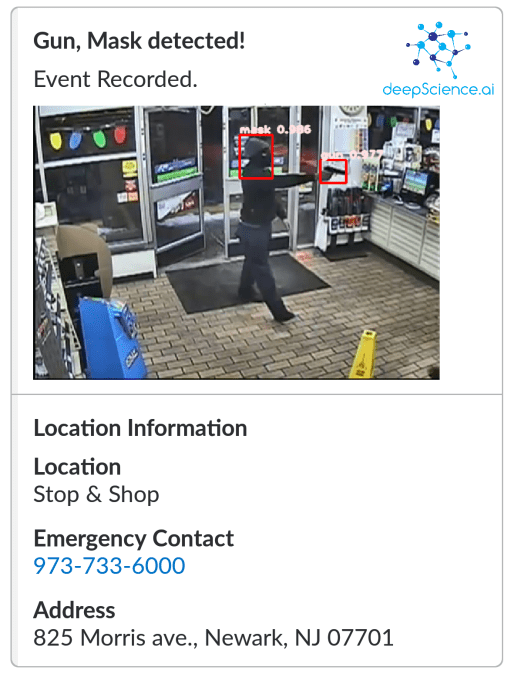

Deep Science AI made its debut on stage at Disrupt NY 2017, showing in a live demo how its computer vision system could spot a gun or mask in CCTV footage, potentially alerting a store or security provider to an imminent crime. The company has now been acquired in a friendly merger with Defendry, which is looking to deploy the tech more widely.

It’s a great example of a tech-focused company looking to get into the market, and a market-focused company looking for the right tech.

The idea was this: if you have a chain of 20 stores with 3 cameras at each, that’s 60 feeds, and since a person can only reliably keep an eye on 8-10 feeds at a time, you need six to eight people watching at all times just to make sure those cameras aren’t pointless. If you instead used Deep Science AI’s middle layer, which highlights shady situations like drawn guns, one person could conceivably keep an eye on hundreds of feeds. It was a good pitch, though the company didn’t take the cup that year.

“The TechCrunch Battlefield was a great launching-off point as far as getting our name and capabilities out there,” said Deep Science AI co-founder Sean Huver in an interview (thanks for the plug, Sean). “We had some really large names in the retail space request pilots. But we quickly discovered that there wasn’t enough in the infrastructure as far as what actually happens next.”

That is to say, things like automated security dispatch and integration with companies’ private servers and hardware.

“You really need to build the monitoring around the AI technology rather than the other way around,” Huver admitted.

Meanwhile, Pat Sullivan at Defendry was working on establishing automated workflows for internet of things devices — from adjusting the A/C if the temperature exceeds certain bounds to, Sullivan realized at some point, notifying a company of serious problems like robberies and fires.

“One of the most significant alerts that could take place is someone has a gun and is doing something bad,” he said. “Why can’t our workflows kick off an active response to that alert, with notifications and tasks, etc.? That led me to search for a weapons and dangerous situations dataset, which led me to Sean.”

Although the product was still only a prototype when the company took the stage, the success of its live demo, in which a team member set off an alert in a live feed (gutsy to attempt this), showed that it actually worked, unlike, as Sullivan discovered, the products of many other companies advertising the same thing.

“Everyone said they had the goods, but when you evaluated, they really didn’t,” he opined. “Almost all of them wanted to build it for us — for a million dollars. But when we came across Deep Science we were thrilled to see that they actually could do what they said they could do.”

Ideally, he went on to suggest, the system could be not just an indicator of crimes in progress but crimes about to begin: a person donning a mask or pulling out a gun in a parking lot could trigger exterior doors to lock, for instance. And when a human checks in, either the police could be on their way before the person reaches the entrance, or it could be a false positive and the door could be unlocked before anyone even noticed anything had happened.

Now, one part of the equation that’s mercifully not necessarily relevant here is that of bias in computer vision algorithms. We’ve seen how women and people of color — to start — are disproportionately affected by error, misidentification, and so on. I asked Huver and Sullivan if these issues were something they had to accommodate.

Luckily this tech doesn’t rely on facial analysis or anything like that, they explained.

“We’re really stepping around that issue because we’re focusing on very distinct objects,” said Huver. “There’s behavior and motion analysis, but the accuracy rates just aren’t there to perform at scale for what we need.”

“We’re not keeping a list of criminals or terrorists and trying to match their face to the list,” added Sullivan.

The two companies talked licensing but ultimately decided they’d work best as a single organization, and finalized the paperwork just a couple of weeks ago. They declined to detail the financials, which is understandable given the hysteria around AI startups and valuations.

They’re working together with Avinet, a security hardware provider that will essentially be the preferred vendor for setups put together by the Defendry team for clients and has invested an undisclosed amount in the partnership. We’ll be following the progress of this Battlefield success story closely.