Google Cloud gets support for Nvidia’s Tesla P4 inferencing accelerators

These days, no cloud platform is complete without support for GPUs. There’s no way to support modern high-performance and machine learning workloads without them, after all. Often, the focus of these offerings is on building machine learning models, but today, Google is launching support for the Nvidia Tesla P4 accelerator, which focuses specifically on inferencing to help developers run their existing models faster.

In addition to these machine learning workloads, Google Cloud users can also use the GPUs for running remote display applications that need a fast graphics card. To do this, the GPUs support Nvidia Grid, the company’s system for making server-side graphics more responsive for users who log in to remote desktops.

Since the P4s come with 8GB of GDDR5 memory and can handle up to 22 tera-operations per second for integer (INT8) operations, these cards can handle most inferencing workloads you throw at them. And since buying one will set you back at least $2,200, if not more, renting them by the hour may not be the worst idea.

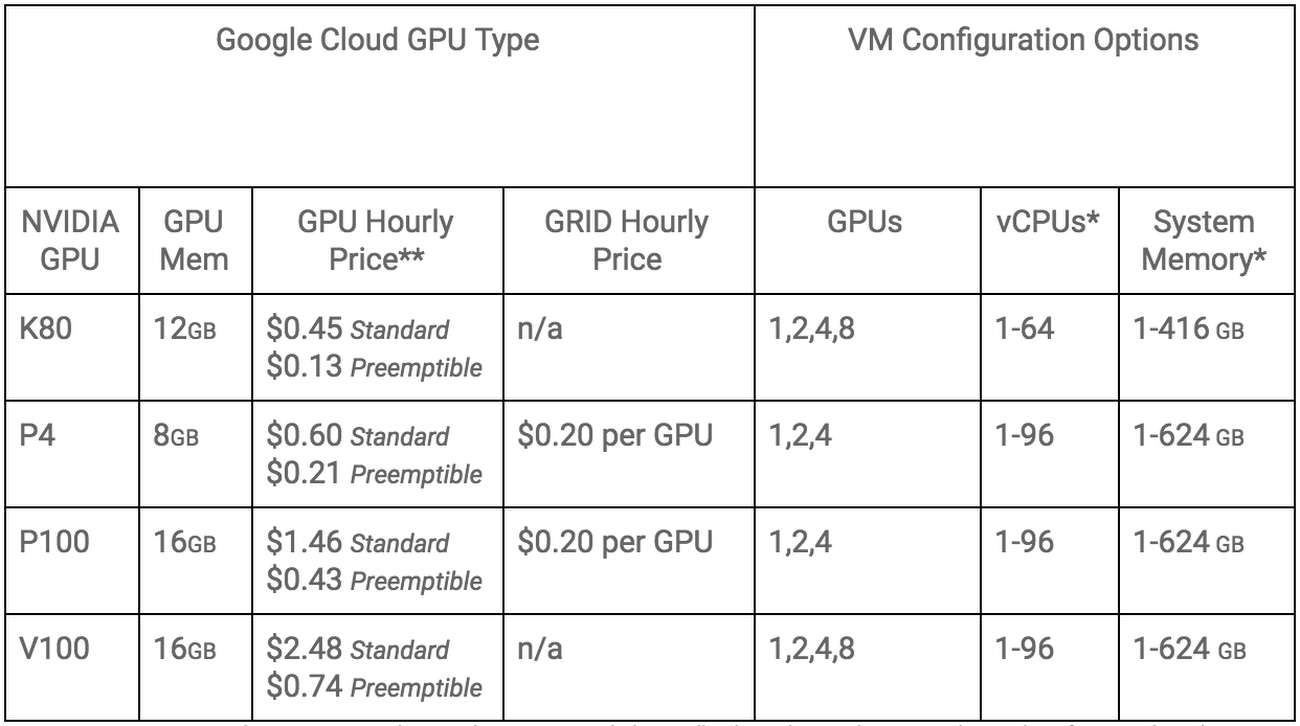

On Google Cloud, the P4 will cost $0.60 per hour with standard pricing and $0.21 per hour if you’re comfortable with running a preemptible GPU. That’s significantly lower than Google’s prices for the P100 and V100 GPUs, though we’re talking about different use cases here, too.
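To put the rent-versus-buy question in numbers, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted in this article ($2,200 to buy, $0.60 and $0.21 per hour to rent). The function names are made up for illustration, and the calculation assumes round-the-clock usage while ignoring everything else: the cost of the virtual machine the GPU attaches to, power and hosting for a purchased card, and the fact that preemptible GPUs can be shut down at any time.

```python
# Figures quoted in the article; everything else here is an illustrative assumption.
PURCHASE_PRICE_USD = 2200.00          # approximate minimum price to buy a P4
STANDARD_RATE_USD_PER_HOUR = 0.60     # standard Google Cloud pricing
PREEMPTIBLE_RATE_USD_PER_HOUR = 0.21  # preemptible Google Cloud pricing


def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """Hours of rental after which renting has cost as much as buying."""
    return purchase_price / hourly_rate


def monthly_cost(hourly_rate: float, hours_per_day: float = 24, days: int = 30) -> float:
    """Rental cost for one month at the given daily usage (assumed 30-day month)."""
    return hourly_rate * hours_per_day * days


if __name__ == "__main__":
    for label, rate in [
        ("standard", STANDARD_RATE_USD_PER_HOUR),
        ("preemptible", PREEMPTIBLE_RATE_USD_PER_HOUR),
    ]:
        hours = break_even_hours(PURCHASE_PRICE_USD, rate)
        print(
            f"{label}: break-even after {hours:,.0f} hours "
            f"(~{hours / 24:,.0f} days of continuous use), "
            f"${monthly_cost(rate):,.2f} per 30-day month"
        )
```

Under those simplified assumptions, a P4 rented at the standard rate would need to run nonstop for roughly five months before the rental bill matched the purchase price, and well over a year at the preemptible rate.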

The new GPUs are now available in us-central1 (Iowa), us-east4 (N. Virginia), northamerica-northeast1 (Montreal) and europe-west4 (Netherlands), with more regions coming soon.