The new maker toolkit: IoT, AI and Google Cloud Platform

Voice interaction is everywhere these days—via phones, TVs, laptops and smart home devices that use technology like the Google Assistant. And with the availability of maker-friendly offerings like Google AIY’s Voice Kit, the maker community has been getting in on the action and adding voice to their Internet of Things (IoT) projects.

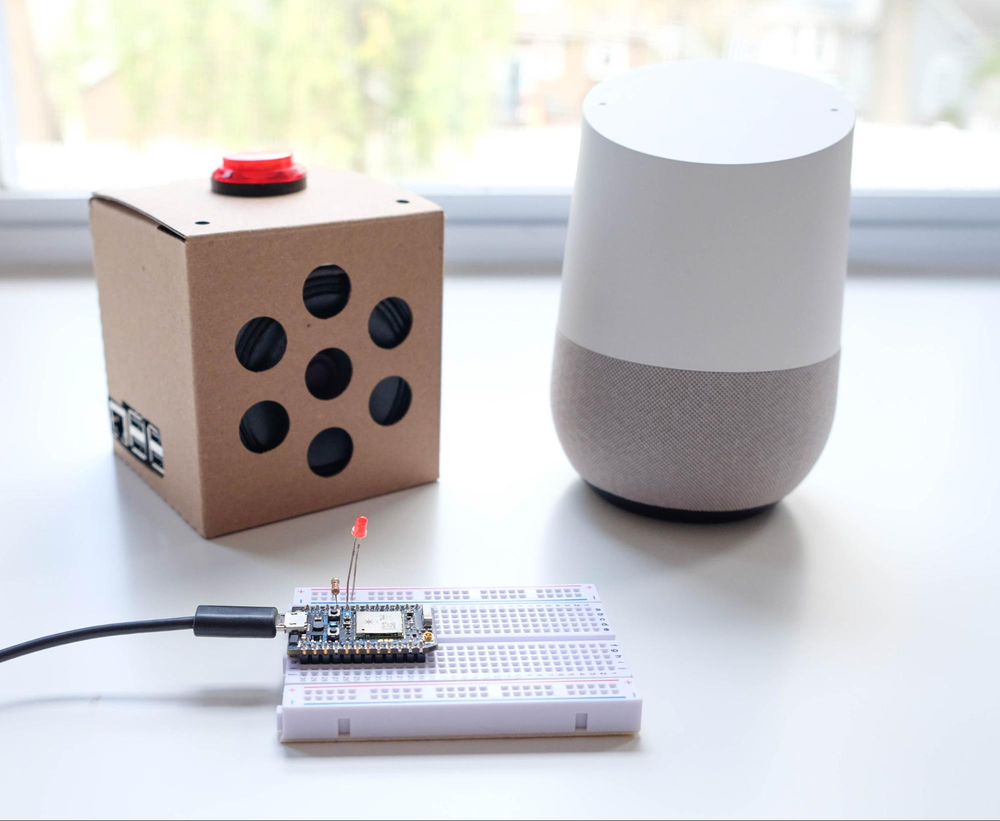

As avid makers ourselves, we wrote an open-source, maker-friendly tutorial to show developers how to piggyback on a Google Assistant-enabled device (Google Home, Pixel, Voice Kit, etc.) and add voice to their own projects. We also created an example application to help you connect your project with GCP-hosted web and mobile applications, or tap into sophisticated AI frameworks that can provide more natural conversational flow.

Let’s take a look at what this tutorial, and our example application, can help you do.

Particle Photon: the brains of the operation

The Photon microcontroller from Particle is an easy-to-use IoT prototyping board that comes with onboard Wi-Fi and USB support, and is compatible with the popular Arduino ecosystem. It’s also a great choice for internet-enabled projects: every Photon gets its own webhook in Particle Cloud, and Particle provides a host of additional integration options with its web-based IDE, JavaScript SDK and command-line interface. Most importantly for the maker community, Particle Photons are super affordable, starting at just $19.
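To make the Particle Cloud connection concrete, here is a minimal sketch of how a Node.js service might describe an HTTP call to a Photon. It only builds the request and does not send it. It assumes the Photon firmware has registered a cloud function named `led` that accepts `on` or `off`; that name, along with the `DEVICE_ID` and `ACCESS_TOKEN` placeholders and the `buildLedRequest` helper, is illustrative and not taken from the tutorial.

```javascript
// Sketch: build (but do not send) a Particle Cloud function call.
// Assumptions: the firmware registered a cloud function named "led" that
// accepts "on" or "off"; DEVICE_ID and ACCESS_TOKEN are placeholders.
const PARTICLE_API = 'https://api.particle.io/v1';

function buildLedRequest(deviceId, accessToken, state) {
  if (state !== 'on' && state !== 'off') {
    throw new Error(`Unsupported LED state: ${state}`);
  }
  return {
    url: `${PARTICLE_API}/devices/${encodeURIComponent(deviceId)}/led`,
    options: {
      method: 'POST',
      headers: {
        Authorization: `Bearer ${accessToken}`,
        'Content-Type': 'application/x-www-form-urlencoded',
      },
      // Particle hands the "arg" form field to the firmware function as a string.
      body: new URLSearchParams({ arg: state }).toString(),
    },
  };
}

const request = buildLedRequest('DEVICE_ID', 'ACCESS_TOKEN', 'on');
console.log(request.url);
// To send it on Node 18+ (which ships a global fetch):
//   fetch(request.url, request.options).then((res) => res.json()).then(console.log);
```

Keeping the request builder separate from the network call makes it easy to check the URL and payload before pointing it at a real device.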

Connecting the Google Assistant and Photon: Actions on Google and Dialogflow

The Google Assistant (via Google Home, Pixel, Voice Kit, etc.) responds to your voice input, and the Photon (through Particle Cloud) reacts to your application’s requests (in this case, turning an LED on and off). But how do you tie the two together? Let’s take a look at all the moving parts:

- Actions on Google is the developer platform for the Google Assistant. With Actions on Google, developers build apps to help answer specific queries and connect users to products and services. Users interact with apps for the Assistant through a conversational, natural-sounding back-and-forth exchange, and your Action passes those user requests on to your app.

- Dialogflow (formerly API.AI) lets you build even more engaging voice and text-based conversational interfaces powered by AI, and sends out request data via a webhook.

- A server (or service) running Node.js handles the resulting user queries.

Along with some sample applications, our guide includes a Dialogflow agent, which lets you parse queries and route actions back to users (by voice and/or text) or to other applications. Dialogflow provides a variety of interface options, from an easy-to-use web-based GUI to a robust Node.js-powered SDK for interacting with both your queries and the outside world. In addition, its powerful machine learning tools add intelligence and natural language processing. Your applications can learn queries and intents over time, so they return better results the more they are used. (The recently announced Dialogflow Enterprise Edition offers greater flexibility and support to meet the needs of large-scale businesses.)
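As a rough illustration of the webhook step, the sketch below turns a Dialogflow request body into a device command plus a spoken reply. It assumes the v2 webhook format (`queryResult` in the request, `fulfillmentText` in the response); other API versions name these fields differently. The `state` parameter, the `handleDialogflowRequest` function and the command shape are hypothetical and would need to match your own agent.

```javascript
// Sketch: map a Dialogflow webhook request body to a device command and a reply.
// Assumptions: the v2 webhook format (queryResult / fulfillmentText) and an
// intent parameter named "state" (hypothetical, defined in your own agent).
function handleDialogflowRequest(body) {
  const queryResult = (body && body.queryResult) || {};
  const parameters = queryResult.parameters || {};
  const state = String(parameters.state || '').toLowerCase();

  if (state !== 'on' && state !== 'off') {
    // No usable parameter: ask the user again instead of guessing.
    return {
      command: null,
      response: { fulfillmentText: 'Sorry, should I turn the LED on or off?' },
    };
  }
  return {
    command: { device: 'led', state },
    response: { fulfillmentText: `OK, turning the LED ${state}.` },
  };
}

const sample = {
  queryResult: { queryText: 'turn the light on', parameters: { state: 'On' } },
};
console.log(JSON.stringify(handleDialogflowRequest(sample)));
```

The returned `response` object is what the server would send back to Dialogflow as JSON, while `command` is what it would forward to the device.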

Backend infrastructure: GCP

It’s a no-brainer to build your IoT apps on a Google Cloud Platform (GCP) backend, as you can use a single Google account to sign into your voice device, create Actions on Google apps and Dialogflow agents, and host the web services. To help get you up and running, we developed two sample web applications based on different GCP technologies that you can use as inspiration when creating a voice-powered IoT app:

- Cloud Functions for Firebase. If your goal is quick deployment and iteration, Cloud Functions for Firebase is a simple, low-cost and powerful option, even if you don’t have much server-side development experience. It integrates quickly and easily with the other tools used here. Dialogflow, for example, now allows you to drop Cloud Functions for Firebase code directly into its graphical user interface.

- App Engine. For those of you with more development experience and/or curiosity, App Engine is just as easy to deploy and scale, but includes more options for integrations with your other applications, additional programming language/framework choices, and a host of third-party add-ons. App Engine is a great choice if you already have a Node.js application to which you want to add voice actions, you want to tie into more of Google’s machine learning services, or you want to get deeper into device connection and management.
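One way to keep both hosting options open is to write the webhook as a plain Express-style `(req, res)` handler, which either platform can run. The sketch below is an assumption about how you might structure it, not the layout of our sample apps: `createWebhookHandler`, the `sendToDevice` callback and the `state` parameter are hypothetical names, and the deployment lines are shown only as comments because they depend on the `firebase-functions` and `express` packages.

```javascript
// Sketch: one Express-style (req, res) handler that either host could run.
// sendToDevice is a hypothetical callback standing in for the Particle Cloud call.
function createWebhookHandler(sendToDevice) {
  return function webhook(req, res) {
    if (req.method !== 'POST') {
      res.status(405).json({ error: 'Use POST' });
      return;
    }
    const parameters = ((req.body || {}).queryResult || {}).parameters || {};
    const state = parameters.state; // hypothetical parameter name
    if (state !== 'on' && state !== 'off') {
      res.status(200).json({ fulfillmentText: 'Should the LED be on or off?' });
      return;
    }
    sendToDevice(state);
    res.status(200).json({ fulfillmentText: `LED is now ${state}.` });
  };
}

// Cloud Functions for Firebase (assumes the firebase-functions package):
//   exports.webhook = require('firebase-functions').https.onRequest(createWebhookHandler(send));
// App Engine with Express (assumes express with its JSON body parser enabled):
//   app.post('/webhook', createWebhookHandler(send));

// Local dry run with stand-in req/res objects instead of a real server.
const sent = [];
const fakeRes = {
  statusCode: null,
  payload: null,
  status(code) { this.statusCode = code; return this; },
  json(data) { this.payload = data; return this; },
};
createWebhookHandler((s) => sent.push(s))(
  { method: 'POST', body: { queryResult: { parameters: { state: 'on' } } } },
  fakeRes
);
console.log(fakeRes.statusCode, fakeRes.payload, sent);
```

Injecting `sendToDevice` keeps the handler testable without a device and lets you swap the transport later without touching the voice logic.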

Next steps

As makers, we’ve only just scratched the surface of what we can do with new tools like IoT, AI and cloud. Check out our full tutorials, and grab the code on GitHub. With these examples to build from, we hope we’ve made it easier for you to add voice powers to your maker project. For some extra inspiration, check out what other makers have built with AIY Voice Kit. And for even more ways to add machine learning to your maker project, check out the AIY Vision Kit, which just went on pre-sale today.

We can’t wait to see what you build!